Agent Runtimes: Build AI Agents That Connect to Everything

Today we're excited to announce significant enhancements to Agent Runtimes — our open-source framework for building AI agents that can connect to any tool, any model, and any interface.

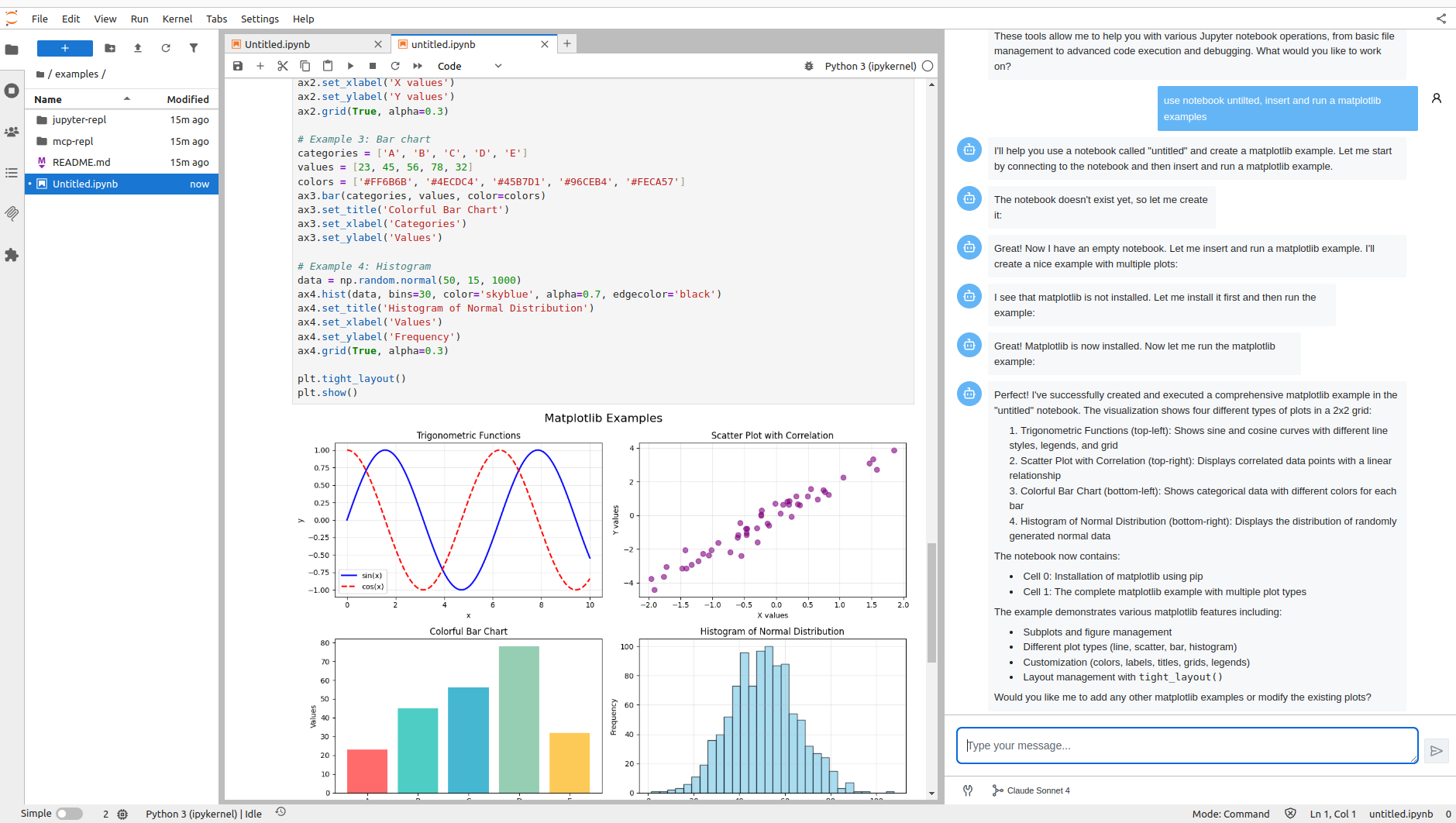

The first user is the Jupyter AI Agents extension, which brings intelligent agents directly into JupyterLab notebooks. But Agent Runtimes is designed as a general-purpose framework that works in any environment.

These updates introduce industry-standard transport protocols, rich UI extensions, and first-class Model Context Protocol (MCP) support — all designed to make it easier than ever to build production-ready AI agents that do real work.

The Challenge: AI Agents Need More Than Just a Model

Building useful AI agents requires more than connecting to an LLM. Your agents need to:

- Search the web for up-to-date information

- Access files and databases in your infrastructure

- Execute code and interact with APIs

- Present rich interfaces to your users

Until now, wiring all these capabilities together meant writing custom integration code, managing complex lifecycles, and handling failures gracefully — a significant engineering investment.

Industry-Standard Transport Protocols

One of the biggest challenges in the AI agent ecosystem is fragmentation: many frameworks define their own protocol, which makes interoperable systems hard to build. Agent Runtimes addresses this by supporting four transport protocols out of the box:

AG-UI — Agent User Interaction Protocol

AG-UI (Agent User Interaction Protocol) is an open standard for agent-frontend communication. It provides a unified way for agents to stream responses, handle tool calls, and manage conversation state.

Vercel AI SDK

The Vercel AI SDK has become the go-to choice for building AI-powered applications in the JavaScript ecosystem. Agent Runtimes implements the Vercel AI streaming protocol, so you can use familiar patterns and tools.

ACP — Agent Communication Protocol

ACP is an open protocol for agent interoperability that solves the growing challenge of connecting AI agents, applications, and humans.

A2A — Agent-to-Agent Protocol

A2A is a protocol introduced by Google that lets agents discover, communicate, and collaborate with each other. Use it to build multi-agent systems where specialized agents work together on complex tasks.

Why This Matters

No vendor lock-in. Switch between protocols without rewriting your agents. Start with Vercel AI for a quick prototype, then add A2A when you need multi-agent collaboration.

Ecosystem compatibility. Your agents work with the tools and frameworks your team already uses — CopilotKit, Vercel AI SDK, or custom implementations.

Future-proof architecture. As new protocols emerge, Agent Runtimes adopts them, keeping your investment protected.

Rich UI Extensions: A2UI, MCP-UI, and MCP Apps

AI agents shouldn't be limited to text-in, text-out interactions. We've added three extension protocols that enable rich, interactive experiences:

A2UI — Agent-to-UI Communication

Enable your agents to send structured UI updates and receive user input in real time. Perfect for building chat interfaces that need progress indicators, form inputs, or interactive elements.

MCP-UI — Browse and Execute Tools

Give users a visual interface to explore available MCP tools, understand their parameters, and see execution results. Great for debugging and building trust in agent behavior.

MCP Apps — Full Application Experiences

Following the MCP Apps specification, your MCP servers can now serve complete application experiences — dashboards, forms, and multi-page flows — not just API endpoints.

First-Class MCP Support

Model Context Protocol (MCP) is quickly becoming the standard for connecting AI agents to external tools. With this release, Agent Runtimes provides production-ready MCP integration out of the box.

What This Means for You

Zero-configuration tool access. Add tools like Tavily search, LinkedIn data, or custom MCP servers in ~/.datalayer/mcp.json:

    {
      "mcpServers": {
        "tavily": {
          "command": "npx",
          "args": ["-y", "tavily-mcp@0.1.3"],
          "env": {
            "TAVILY_API_KEY": "${TAVILY_API_KEY}"
          }
        },
        "linkedin": {
          "command": "uvx",
          "args": [
            "--from",
            "git+https://github.com/stickerdaniel/linkedin-mcp-server",
            "linkedin-mcp-server"
          ]
        }
      }
    }
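To make the `${TAVILY_API_KEY}` placeholder in the config above concrete, here is a minimal Python sketch of how such a file could be read and its placeholders filled from the environment. It is an illustration only: the helper names `expand_env` and `load_mcp_servers` are hypothetical, and the choice to leave unknown placeholders untouched is an assumption, not a description of how Agent Runtimes itself resolves them.

```python
import json
import os
import re
from pathlib import Path

# Matches ${NAME} placeholders such as "${TAVILY_API_KEY}".
_PLACEHOLDER = re.compile(r"\$\{([A-Za-z_][A-Za-z0-9_]*)\}")


def expand_env(value, environ=None):
    """Recursively replace ${NAME} in strings; unknown names are left as-is."""
    environ = os.environ if environ is None else environ
    if isinstance(value, str):
        return _PLACEHOLDER.sub(
            lambda m: environ.get(m.group(1), m.group(0)), value
        )
    if isinstance(value, list):
        return [expand_env(item, environ) for item in value]
    if isinstance(value, dict):
        return {key: expand_env(item, environ) for key, item in value.items()}
    return value


def load_mcp_servers(path=None, environ=None):
    """Read the config file and return the expanded "mcpServers" mapping."""
    # Default location taken from the post; pass `path` to override it.
    path = Path(path) if path else Path.home() / ".datalayer" / "mcp.json"
    config = json.loads(path.read_text(encoding="utf-8"))
    return expand_env(config.get("mcpServers", {}), environ)
```

Calling `load_mcp_servers()` with `TAVILY_API_KEY` exported would return the `tavily` entry with the real key substituted into its `env` block, which is a quick way to check a config before starting the server.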

For LinkedIn, create a session file first:

    # Install browser
    uvx --from playwright playwright install chromium

    # Create session (opens browser for login)
    uvx --from git+https://github.com/stickerdaniel/linkedin-mcp-server linkedin-mcp-server --get-session

Reliable under pressure. MCP servers are managed with automatic retry logic, exponential backoff, and health monitoring. If a server fails to start, Agent Runtimes retries up to 3 times before gracefully degrading — your agents stay responsive even when external services hiccup.
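The retry behavior described above can be pictured with a short Python sketch. This is not the Agent Runtimes implementation: `start_with_retry` is a hypothetical helper, "up to 3 times" is read here as three attempts in total, and the one-second base delay that doubles after each failure is an assumed value.

```python
import time


def start_with_retry(start, max_attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call start() until it succeeds or max_attempts is reached.

    Returns (result, None) on success, or (None, last_error) after giving up,
    so the caller can keep running without that server instead of crashing.
    """
    last_error = None
    for attempt in range(max_attempts):
        try:
            return start(), None
        except Exception as exc:  # a real system would catch narrower errors
            last_error = exc
            # Exponential backoff: base_delay, 2x, 4x, ... between attempts.
            # No sleep after the final attempt, since nothing follows it.
            if attempt < max_attempts - 1:
                sleep(base_delay * 2 ** attempt)
    return None, last_error
```

Returning the error rather than raising it is what makes degradation graceful in this sketch: the caller can mark the server as not ready and continue serving the agent with the remaining tools.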

Real-time visibility. Check MCP server status anytime via the API:

    curl http://localhost:8765/api/v1/configure/mcp-toolsets-status

    {
      "initialized": true,
      "ready_count": 2,
      "total_count": 2,
      "servers": {
        "tavily": { "ready": true, "tools": ["tavily_search"] },
        "linkedin": { "ready": true, "tools": ["get_person_profile", "get_company_profile"] }
      }
    }
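For scripts or health checks, the same status endpoint can be polled from Python. The sketch below uses only the standard library and relies on the response fields shown in the example above; the helper names `fetch_status`, `not_ready`, and `all_ready` are hypothetical, and the port comes from the curl example rather than from any documented default.

```python
import json
from urllib.request import urlopen

STATUS_URL = "http://localhost:8765/api/v1/configure/mcp-toolsets-status"


def fetch_status(url=STATUS_URL, timeout=5.0):
    """Fetch and decode the status document (needs a running server)."""
    with urlopen(url, timeout=timeout) as response:
        return json.load(response)


def not_ready(status):
    """Return the sorted names of servers that do not report ready."""
    servers = status.get("servers", {})
    return sorted(name for name, info in servers.items() if not info.get("ready"))


def all_ready(status):
    """True when the toolsets are initialized and every server is ready."""
    return bool(status.get("initialized")) and (
        status.get("ready_count") == status.get("total_count")
    )
```

A deployment script might call `all_ready(fetch_status())` in a loop before sending the first prompt, and log `not_ready(...)` to show which server is still starting.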

A Complete REST API

Every capability is exposed through a clean, documented REST API:

| What You Want to Do | Endpoint |
|---|---|
| List available agents | GET /api/v1/agents |
| Send a prompt (streaming) | POST /api/v1/agents/{id}/prompt |
| Check MCP status | GET /api/v1/configure/mcp-toolsets-status |
| Get agent details | GET /api/v1/agents/{id} |

Interactive API documentation is available at /docs (Swagger) and /redoc when you start the server.
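As a rough illustration of calling these endpoints from Python, the sketch below builds the requests with the standard library. The paths come from the table above, but several details are assumptions: the base URL reuses the port from the earlier curl example, the helper names are hypothetical, and the `{"prompt": ...}` request body is a guess at the payload shape, so check the Swagger page at /docs for the real schema.

```python
import json
from urllib.parse import quote
from urllib.request import Request

BASE_URL = "http://localhost:8765"  # assumed; matches the curl example's port


def list_agents_request(base_url=BASE_URL):
    """Build GET /api/v1/agents."""
    return Request(f"{base_url}/api/v1/agents", method="GET")


def agent_details_request(agent_id, base_url=BASE_URL):
    """Build GET /api/v1/agents/{id}, escaping the id for use in a path."""
    return Request(f"{base_url}/api/v1/agents/{quote(agent_id, safe='')}", method="GET")


def prompt_request(agent_id, prompt, base_url=BASE_URL):
    """Build POST /api/v1/agents/{id}/prompt with a JSON body."""
    # Assumed payload shape; the post does not document the request schema.
    body = json.dumps({"prompt": prompt}).encode("utf-8")
    return Request(
        f"{base_url}/api/v1/agents/{quote(agent_id, safe='')}/prompt",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

Each returned object can be passed to `urllib.request.urlopen`. For the streaming prompt endpoint, iterating over the response line by line is a reasonable starting point, though the exact stream format depends on the transport protocol in use.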

Getting Started in 5 Minutes

    # Install Agent Runtimes
    pip install agent-runtimes

    # Set your API keys
    export ANTHROPIC_API_KEY="sk-ant-..."
    export TAVILY_API_KEY="tvly-..."

    # Start the server
    python -m agent_runtimes

That's it. You now have a production-ready AI agent with web search capabilities, accessible via REST API or our React UI components.

Built on Pydantic AI

Agent Runtimes is built on top of Pydantic AI — a type-safe Python agent framework that gives you structured outputs, reliable tool calling, and multi-model support out of the box.

Why Pydantic AI? It's production-ready, well-maintained, and integrates seamlessly with MCP. But we're not locked in — we're open to expanding support for other frameworks based on community feedback. Want to see Google ADK, LangChain, or CrewAI support? Let us know!

What's Next

We're continuing to expand Agent Runtimes with:

- More MCP server integrations — databases, file systems, and enterprise tools

- Broader framework support — Google ADK, LangChain, CrewAI based on your feedback

- Agent-to-Agent (A2A) communication — let agents collaborate on complex tasks

- Enhanced observability — tracing, metrics, and debugging tools

Join the Community

Agent Runtimes is open source and we'd love your contributions.

Build AI agents that connect to everything. Build with Agent Runtimes.