Deep Dive into our Examples Collection

In the fast-evolving world of data science and AI, having the right tools and resources is critical for success. As datasets grow larger and computations more complex, data scientists need scalable, flexible, and reliable solutions for demanding analyses. Datalayer allows you to scale your data science workflows with ease, thanks to its Remote Kernels solution. This feature enables you to run computations in powerful cloud environments directly from JupyterLab, VS Code, or the CLI.

We have created a public GitHub repository with a collection of Jupyter Notebooks that showcases scenarios where Datalayer proves highly beneficial. These examples cover a wide range of topics, including machine learning, computer vision, natural language processing, and generative AI.

Explore the Datalayer Examples Collection

Here is an overview of the examples available in the Datalayer public GitHub repository. To access the notebook code, simply click on the links provided.

1. OpenCV Face Detection

This example uses OpenCV to detect faces in YouTube videos. It relies on a traditional Haar Cascade model, which may be less accurate than modern deep learning-based models. Face detection and video processing are parallelized across multiple CPUs to speed them up, and Datalayer extends this by letting you scale seamlessly to more CPUs.
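As a rough illustration of the multi-CPU pattern described above, the sketch below splits a video into contiguous frame ranges and runs a Haar Cascade detector on each range in a separate process. It is not taken from the notebook: the function names (`split_frames`, `count_faces`, `count_faces_parallel`) and the detector parameters are assumptions, and the video is assumed to be already downloaded to a local file.

```python
from concurrent.futures import ProcessPoolExecutor


def split_frames(total_frames, workers):
    """Split range(total_frames) into at most `workers` contiguous (start, stop) chunks."""
    workers = max(1, min(workers, total_frames))
    size, extra = divmod(total_frames, workers)
    chunks, start = [], 0
    for i in range(workers):
        # The first `extra` chunks take one additional frame each.
        stop = start + size + (1 if i < extra else 0)
        chunks.append((start, stop))
        start = stop
    return chunks


def count_faces(task):
    """Count Haar Cascade detections in frames [start, stop) of one video file."""
    import cv2  # imported in the worker so each process builds its own detector

    video_path, start, stop = task
    detector = cv2.CascadeClassifier(
        cv2.data.haarcascades + "haarcascade_frontalface_default.xml"
    )
    capture = cv2.VideoCapture(video_path)
    capture.set(cv2.CAP_PROP_POS_FRAMES, start)  # seek to the start of the chunk
    faces = 0
    for _ in range(start, stop):
        ok, frame = capture.read()
        if not ok:
            break
        gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
        # scaleFactor and minNeighbors are illustrative values, not tuned ones.
        faces += len(detector.detectMultiScale(gray, scaleFactor=1.1, minNeighbors=5))
    capture.release()
    return faces


def count_faces_parallel(video_path, total_frames, workers=4):
    """Fan the frame chunks out to a pool of worker processes and sum the counts."""
    tasks = [(video_path, a, b) for a, b in split_frames(total_frames, workers)]
    with ProcessPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(count_faces, tasks))
```

Because each worker only needs a file path and a frame range, the same code can use more cores simply by raising `workers` on a larger machine.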

2. Image Classifier with Fast.ai

This example demonstrates how to build a model that distinguishes cats from dogs in pictures using the fast.ai library. Due to the computational demands of training a model, a GPU is required.
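For orientation, a cats-versus-dogs classifier in fast.ai can be as short as the sketch below. It assumes the Oxford-IIIT Pet dataset (where cat breeds have capitalized file names), a ResNet-34 backbone, and a recent fastai release that provides `vision_learner`; the notebook in the repository may make different choices.

```python
def is_cat(filename):
    """In the Oxford-IIIT Pet dataset, cat breeds have capitalized file names."""
    return filename[0].isupper()


def train_cat_dog_classifier(epochs=1):
    """Fine-tune a pretrained ResNet-34 to separate cats from dogs."""
    # Imported lazily: fastai is a heavy dependency and training expects a GPU.
    from fastai.vision.all import (
        ImageDataLoaders, Resize, URLs, error_rate,
        get_image_files, resnet34, untar_data, vision_learner,
    )

    path = untar_data(URLs.PETS) / "images"  # downloads the dataset on first use
    dls = ImageDataLoaders.from_name_func(
        path, get_image_files(path), valid_pct=0.2, seed=42,
        label_func=is_cat, item_tfms=Resize(224),
    )
    learn = vision_learner(dls, resnet34, metrics=error_rate)
    learn.fine_tune(epochs)
    return learn
```

Calling `train_cat_dog_classifier()` on a GPU kernel returns a learner whose `predict` method can then be applied to new images.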

3. Dreambooth

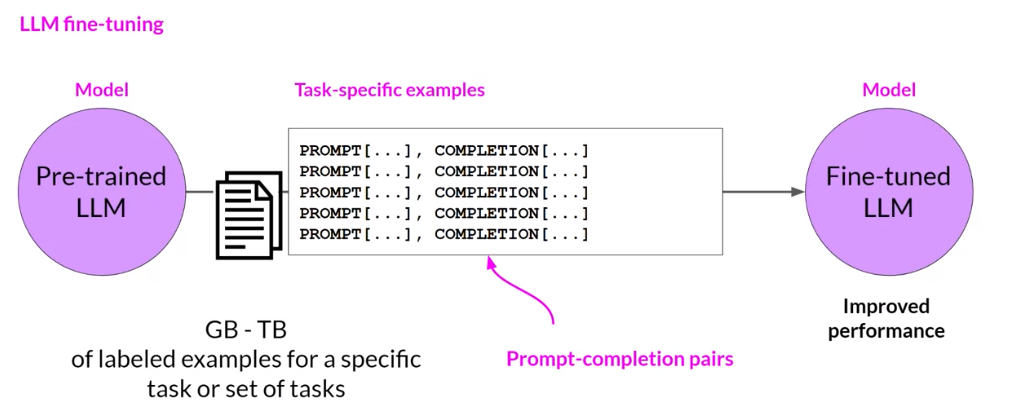

This example uses the Dreambooth method, which takes as input a few images (typically 3-5 images suffice) of a subject (e.g., a specific dog) and the corresponding class name (e.g., "dog"), and returns a fine-tuned, "personalized" text-to-image model (source: Dreambooth). This fine-tuning process requires a GPU.

4. Text Generation with Transformers

These notebook examples demonstrate how to leverage Datalayer's GPU kernels to accelerate text generation using the Gemma model and the Hugging Face Transformers library.

Transformers Text Generation

This notebook uses Gemma-7b and Gemma-7b-it, the instruction-tuned version of Gemma-7b.
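A minimal sketch of generating text with the instruction-tuned variant might look as follows. The Hub model ID `google/gemma-7b-it`, the helper names, and the generation settings are assumptions rather than the notebook's exact code; the model is gated on the Hugging Face Hub, and `device_map="auto"` requires the `accelerate` package.

```python
def build_chat(user_prompt):
    """Wrap a prompt in the message format expected by chat templates."""
    return [{"role": "user", "content": user_prompt}]


def generate(user_prompt, model_id="google/gemma-7b-it", max_new_tokens=128):
    """Generate a reply with an instruction-tuned Gemma checkpoint on a GPU."""
    # Imported lazily so the helper above stays usable without a GPU stack.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(
        model_id, torch_dtype=torch.bfloat16, device_map="auto"
    )
    # Render the chat template to text first, then tokenize it. The template
    # already contains the BOS token, so special tokens are not added again.
    prompt = tokenizer.apply_chat_template(
        build_chat(user_prompt), tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer(prompt, return_tensors="pt", add_special_tokens=False)
    inputs = inputs.to(model.device)
    output = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Keep only the newly generated tokens, not the echoed prompt.
    new_tokens = output[0][inputs["input_ids"].shape[-1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

The base Gemma-7b checkpoint is not chat-tuned, so with that model you would typically pass plain text to the tokenizer instead of going through a chat template.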

Sentiment Analysis with Gemma

This example demonstrates how you can leverage Datalayer's Cell Kernels feature in JupyterLab to offload specific tasks, such as sentiment analysis, to a remote GPU while keeping the rest of your code running locally. By selectively using remote resources, you can optimize both performance and cost. This hybrid approach is well suited to tasks like LLM-based sentiment analysis, where some parts of the code require more computational resources than others. For a detailed explanation and step-by-step guide on using Cell Kernels, check out our blog post on this specific example.
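To make the split between cheap and expensive steps concrete, here is a hypothetical sketch of how such a workflow could be organized. Prompt construction and answer parsing are light enough to stay local, while the `generate` callable stands in for the LLM call that benefits from a remote GPU. All names and the prompt wording are assumptions, and how a cell is assigned to a remote kernel is not shown here.

```python
LABELS = ("positive", "negative", "neutral")


def build_prompt(text):
    """Local, cheap step: turn a piece of text into a classification prompt."""
    return (
        "Classify the sentiment of the following text as positive, "
        f"negative, or neutral. Answer with one word.\n\nText: {text}\nSentiment:"
    )


def parse_label(completion):
    """Local, cheap step: map the model's free-form answer to a known label."""
    words = completion.lower().replace(".", " ").replace(",", " ").split()
    for word in words:
        if word in LABELS:
            return word
    return "unknown"


def analyze(texts, generate):
    """Classify each text; `generate` is the only GPU-heavy call in the loop."""
    return [parse_label(generate(build_prompt(text))) for text in texts]
```

Keeping the model call behind a single function makes it easy to isolate the one step worth running on remote hardware, and to swap in a stub when testing locally.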

5. Mistral Instruction Tuning

Mistral 7B is a large language model (LLM) that contains 7.3 billion parameters and is one of the most powerful models for its size. However, this base model is not instruction-tuned, meaning it may struggle to follow instructions and perform specific tasks. Fine-tuning Mistral 7B on the Alpaca dataset using torchtune makes the model significantly better at tasks such as holding a conversation and answering questions accurately. Due to the computational demands of fine-tuning a model, a GPU is required.
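To give a sense of what instruction-tuning data looks like, the sketch below renders an Alpaca-style record (with `instruction`, `input`, and `output` fields) into the prompt template published with the Alpaca dataset. This is only an illustration of the format: torchtune handles prompt formatting itself, and the helper name `format_alpaca` is an assumption.

```python
PROMPT_WITH_INPUT = (
    "Below is an instruction that describes a task, paired with an input that "
    "provides further context. Write a response that appropriately completes "
    "the request.\n\n"
    "### Instruction:\n{instruction}\n\n### Input:\n{input}\n\n### Response:\n"
)
PROMPT_NO_INPUT = (
    "Below is an instruction that describes a task. Write a response that "
    "appropriately completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n### Response:\n"
)


def format_alpaca(sample):
    """Render one Alpaca record into the prompt the model is trained to complete."""
    # Records with an empty or missing `input` use the shorter template.
    template = PROMPT_WITH_INPUT if sample.get("input") else PROMPT_NO_INPUT
    return template.format(**sample)


example = {"instruction": "Translate to English.", "input": "Bonjour", "output": "Hello"}
prompt = format_alpaca(example)
```

During fine-tuning, the model learns to produce the record's `output` as the continuation of this prompt, which is what teaches a base model to follow instructions.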

Getting Started with Datalayer

Whether you're a seasoned data scientist, an AI enthusiast, or a beginner looking to explore new technologies, our Examples GitHub repository is a great starting point. Paired with our Remote Kernels solution, you'll be able to perform cutting-edge data science analysis at scale, without worrying about hardware limitations.

Here's how you can get started:

Explore the Public Repository: Visit our Examples GitHub repository to access a variety of Jupyter Notebook examples.

Leverage Remote Kernels: Join the Datalayer Beta and start using Remote Kernels to scale your Jupyter Notebooks. Say goodbye to resource constraints and unlock the power of cloud computing for your data science needs.