Securing User Secrets

Handling secrets is one of the most critical aspects of maintaining a secure system. Secrets, such as API keys, passwords, and encryption keys, must be protected from unauthorized access and potential leaks.

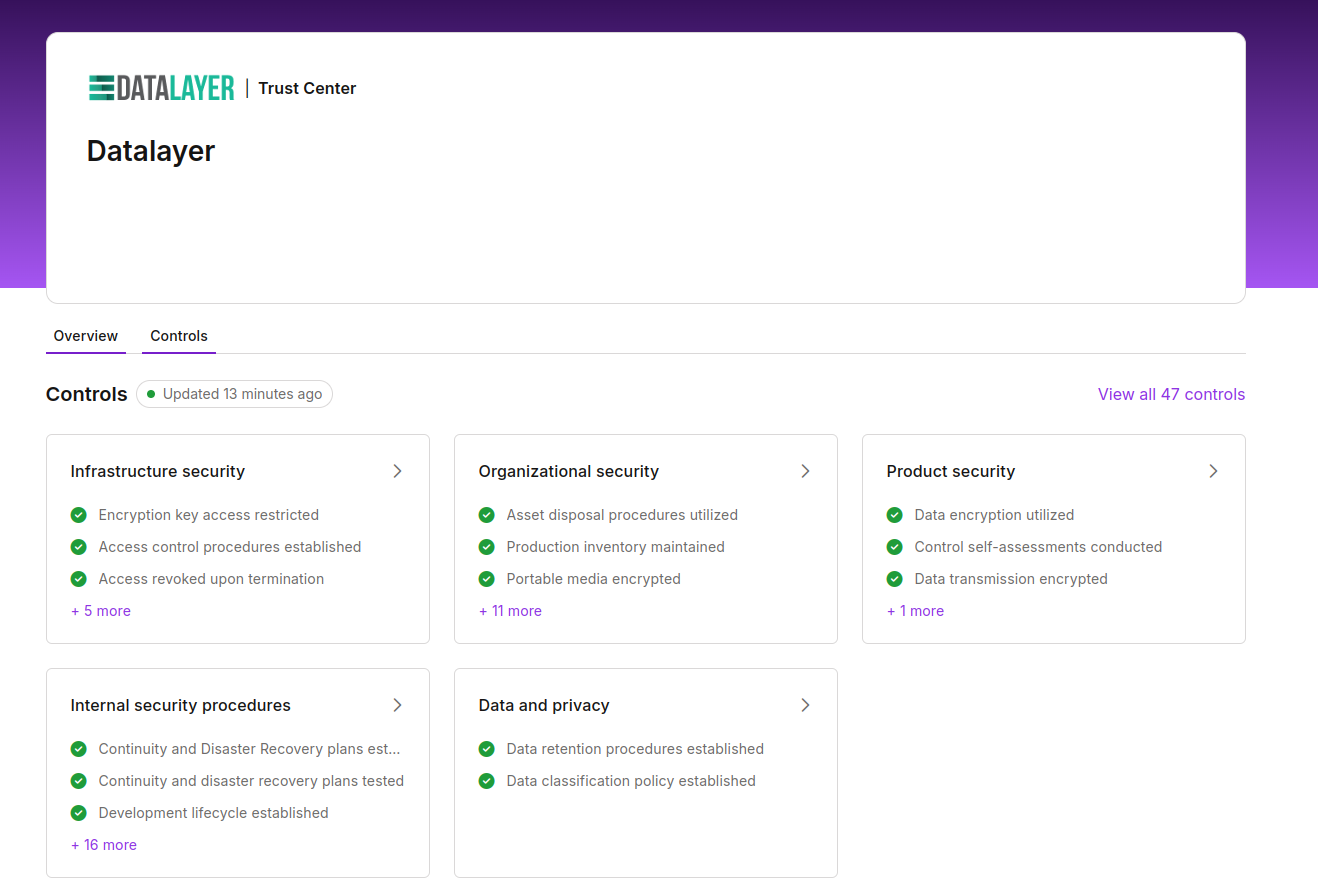

At Datalayer, we take this responsibility seriously and have implemented robust measures to ensure that secrets are handled securely and efficiently.

Why Secrets Management Matters

Secrets are often at the heart of modern cloud applications, providing access to databases, APIs, and services. However, storing these sensitive credentials in less-secure areas, such as system environments or configuration files, leaves them vulnerable to attacks. Even a single exposed secret can result in significant security breaches, data loss, and compromised systems.

To minimize these risks, it's essential to store secrets using specialized solutions designed to handle this specific challenge. These solutions ensure that secrets are properly encrypted, managed, and retrieved only when needed.

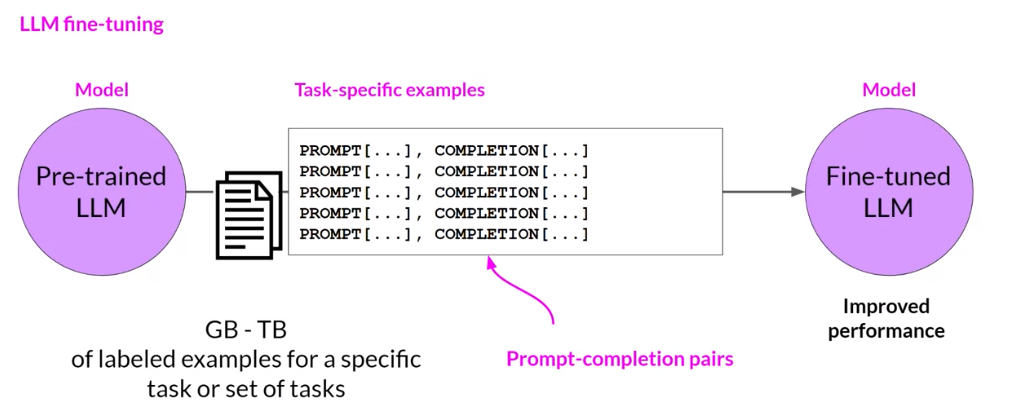

Using a Strong Vault

At Datalayer, we have integrated HashiCorp Vault to store user secrets securely. HashiCorp Vault is one of the most trusted solutions for secret management, widely used by companies like Deutsche Bank and Airbnb. Vault provides an enterprise-grade approach to secrets management, offering encryption, access control, and auditing features that ensure secrets are only accessible by authorized entities.

How It Works at Datalayer

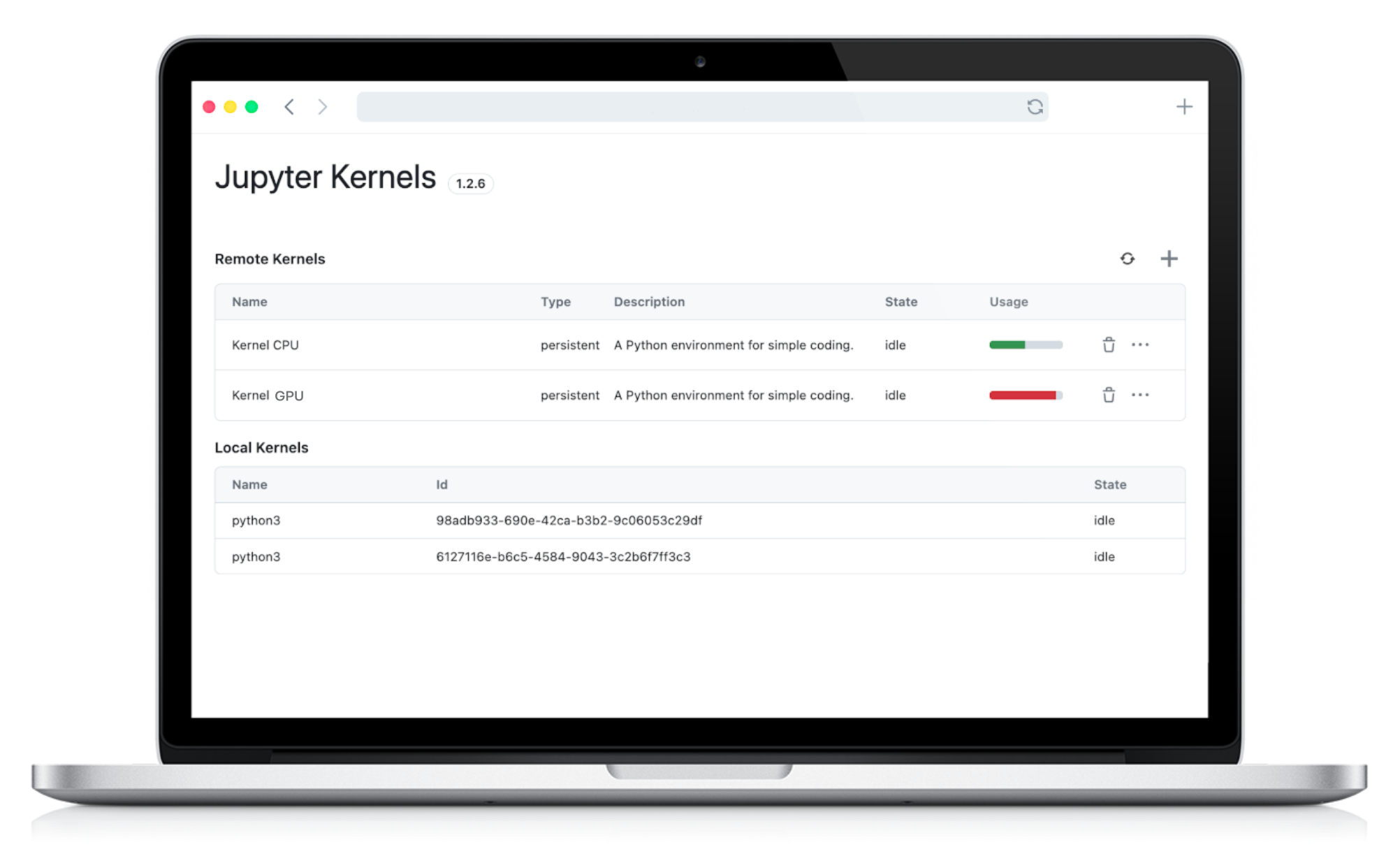

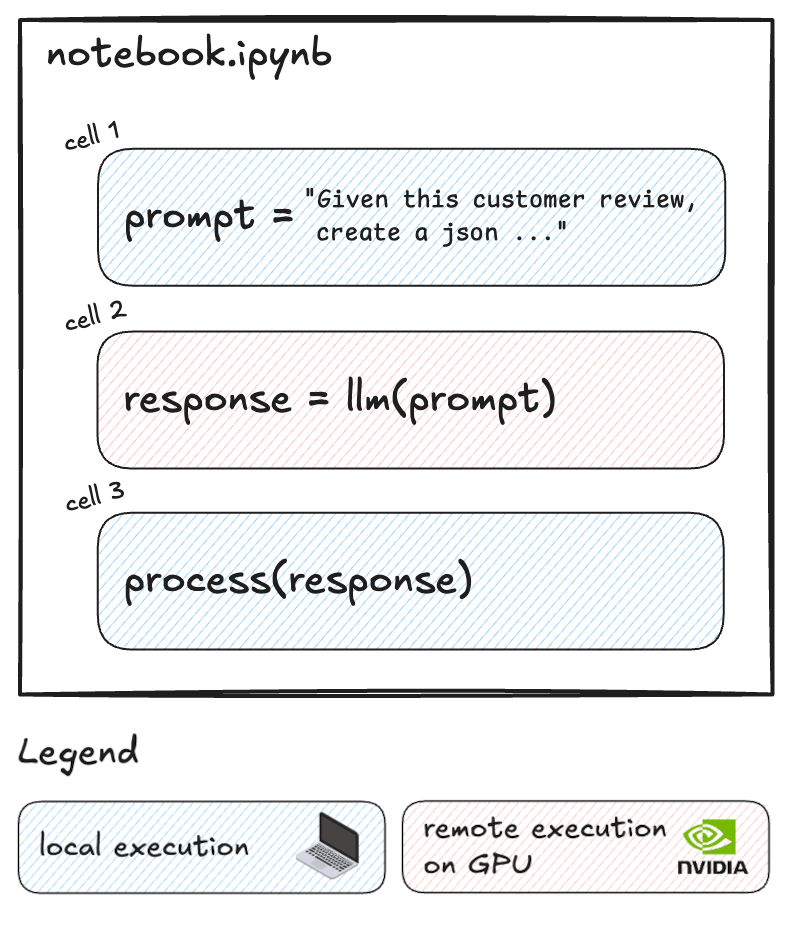

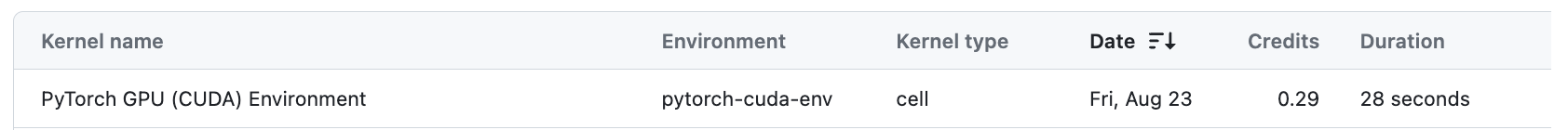

Whenever a Remote Kernel is requested, we fetch user secrets securely from the Vault and inject them into the Remote Kernel as environment variables. This approach ensures that secrets are only available to the processes that require them, reducing the risk of leaks in more exposed parts of the system, such as logs or error messages.
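As a rough illustration of the "fetch, then inject" step, the sketch below turns a Vault read response into a mapping of environment variables. It assumes the secrets live in a Vault KV version 2 engine, whose read responses nest the key/value pairs under `data.data`. The function name `env_from_kv2_response` and the name validation are hypothetical additions for this example, not a description of the actual Datalayer implementation.

```python
import re

# Conservative pattern for environment variable names (an assumption of this sketch).
_ENV_NAME = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


def env_from_kv2_response(response: dict) -> dict[str, str]:
    """Convert a Vault KV v2 read response into an environment mapping.

    KV v2 responses wrap the stored key/value pairs in response["data"]["data"].
    """
    secrets = response["data"]["data"]
    env: dict[str, str] = {}
    for name, value in secrets.items():
        # Reject names that could not be used as environment variables.
        if not _ENV_NAME.fullmatch(name):
            raise ValueError(f"Invalid environment variable name: {name!r}")
        # Environment variable values are always strings.
        env[name] = str(value)
    return env
```

Keeping this step as a pure function means the secret values only pass through memory on their way to the kernel and are never written to disk or logged.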

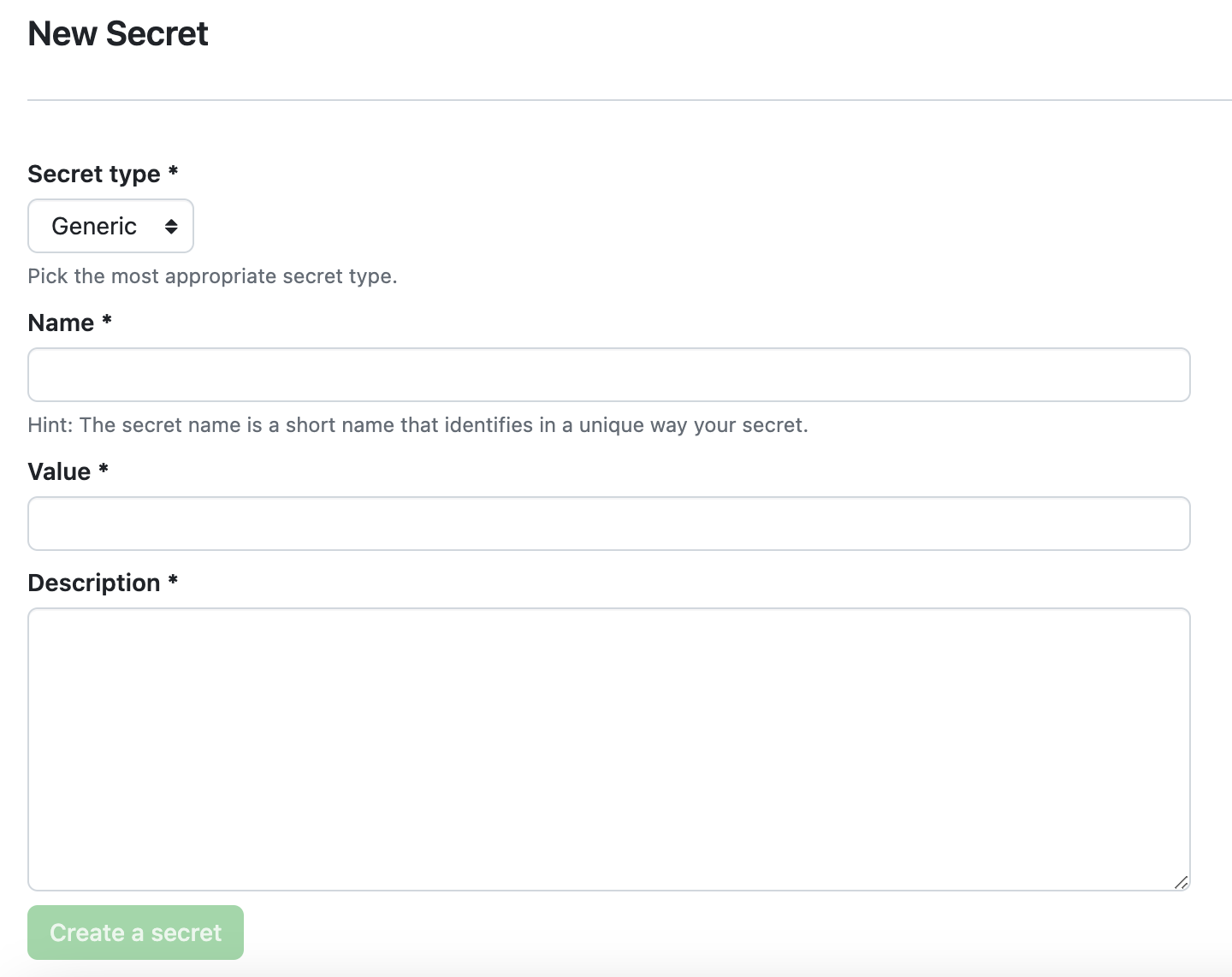

Users can define personal secrets on the platform. These secrets are injected into all of their Remote Kernels as environment variables, and each environment variable takes the name of its secret.
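From inside a Remote Kernel, a secret can then be read like any other environment variable. The helper below is a minimal sketch; `get_secret` and the secret name `MY_API_KEY` are hypothetical and only serve as an example.

```python
import os


def get_secret(name: str) -> str:
    """Return the value of an injected secret, or fail with a clear message."""
    value = os.environ.get(name)
    if value is None:
        raise KeyError(f"Secret {name!r} is not set in this kernel")
    return value
```

For example, after defining a secret named `MY_API_KEY` in the platform, `get_secret("MY_API_KEY")` would return its value in a notebook cell. Failing loudly on a missing secret avoids silently passing `None` to a client library.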

The secrets are stored in HashiCorp Vault, which provides encryption, access control, and auditing. Each time a Remote Kernel is assigned to a user, the platform queries the Vault and injects that user's secrets into the running kernel process as environment variables. The injection relies on the kernel protocol: the companion sidecar container opens a connection to the kernel and sends a code snippet that sets the secrets.
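To make the injection step concrete, here is a minimal sketch of how such a code snippet could be generated for a Python kernel. The function name `build_injection_snippet` is hypothetical, and the exact snippet sent by the sidecar may differ.

```python
def build_injection_snippet(secrets: dict[str, str]) -> str:
    """Return Python source that sets each secret as an environment variable."""
    lines = ["import os"]
    for name, value in secrets.items():
        # repr() produces a valid Python string literal, so quotes and
        # backslashes in a secret cannot break out of the assignment.
        lines.append(f"os.environ[{name!r}] = {value!r}")
    return "\n".join(lines)
```

A sidecar could send the resulting string to the kernel as a silent execute request, for instance with `jupyter_client`'s `KernelClient.execute(snippet, silent=True, store_history=False)`, so that the secret values do not end up in the kernel's execution history.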

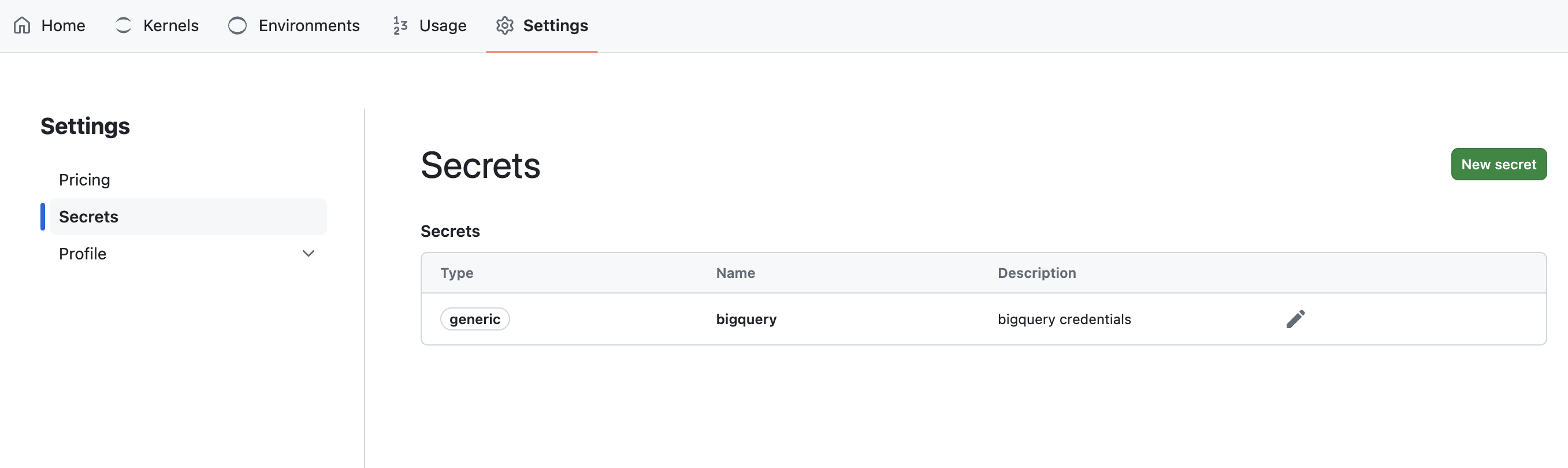

On the platform, you can now find a new Secrets section in the user settings to manage your secrets.

To learn more about how we implemented secrets injection in our platform, check out our technical documentation: Secrets and Env Vars Injection.

What's Next? Integrating SQL Cells and Data Sources

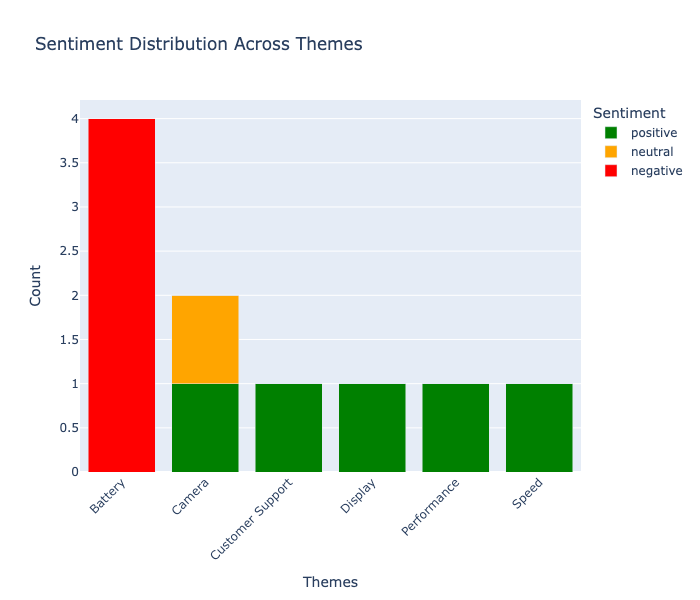

Moving forward, we are working on the next phase of improving our platform by integrating SQL cells and popular storage and database solutions such as Google BigQuery, Snowflake, Amazon Athena, and more.

This will allow for greater flexibility when working with data, as users will be able to securely connect to a variety of databases and query them directly from their remote environments.

With the Vault ensuring the security of database credentials, users can focus on deriving insights from their data without worrying about security breaches or unauthorized access.

Conclusion

The protection of sensitive data is a top priority at Datalayer. With HashiCorp Vault, we ensure that user secrets are securely stored and managed, providing a safe and scalable solution for our platform.

As we continue to enhance our platform with new features like SQL cells and data source integrations, the strong foundation of security we've built with Vault will support us in delivering more powerful and secure tools for our users.